Introduction

Enterprise technical troubleshooting is a critical but challenging task, requiring efficient navigation through diverse and often siloed data sources such as product manuals, FAQs, and internal knowledge bases. Traditional keyword-based search systems often fail to capture the contextual nuances of complex technical issues, leading to prolonged resolution times and suboptimal service delivery.

To address these challenges, a novel Weighted Retrieval-Augmented Generation (RAG) framework has been developed. This agentic AI system dynamically prioritizes data sources based on query context, improving accuracy and adaptability in enterprise environments. The framework leverages advanced retrieval methods, dynamic weighting, and self-evaluation mechanisms to streamline troubleshooting workflows.

Core Innovations of the Weighted RAG Framework

1. Dynamic Weighting Mechanism

Unlike static RAG systems, this framework assigns query-specific weights to data sources.

• Mechanism: Assigns higher priority to product manuals for SKU-specific queries while general FAQs take precedence for broader issues.

• Impact: Ensures the retrieval of highly relevant information tailored to each query.

2. Enhanced Retrieval and Aggregation

• Uses FAISS for dense vector search, optimizing performance for large datasets.

• Filters results using a threshold mechanism to eliminate irrelevant matches, preventing hallucinations during response generation.

• Aggregates filtered results from diverse sources to create a unified, contextually relevant output.

3. Self-Evaluation for Accuracy Assurance

• Integrates a LLaMA-based self-evaluator to assess the contextual relevance and accuracy of generated responses.

• Only responses meeting predefined confidence thresholds are delivered, ensuring reliability and precision.

System Architecture and Methodology

1. Preprocessing and Indexing

• Data sources (e.g., manuals, FAQs) are preprocessed into granular chunks.

• Embeddings generated by the all-MiniLM-L6-v2 model are indexed using FAISS for efficient retrieval.

2. Dynamic Query Handling

• Queries are embedded and matched against the indexed data using weighted retrieval.

• Threshold-based filtering removes low-confidence matches, ensuring only the most relevant data is retained.

3. Response Generation and Validation

• LLaMA generates responses based on retrieved data, followed by a self-evaluation step to verify accuracy.

Experimental Results

1. Performance Analysis

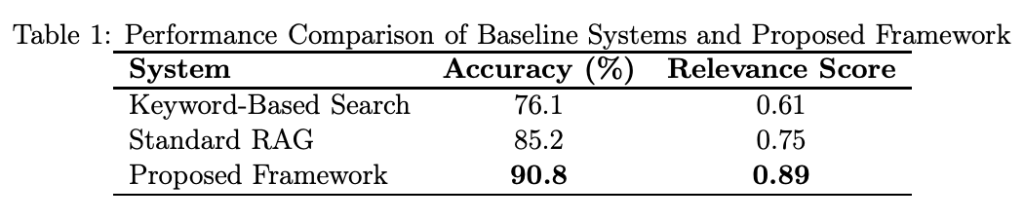

The framework achieved 90.8% accuracy and a relevance score of 0.89, outperforming baseline systems:

• Keyword-Based Search: 76.1% accuracy, 0.61 relevance score.

• Standard RAG: 85.2% accuracy, 0.75 relevance score.

2. Impact of Dynamic Weighting and Filtering

• SKU-specific queries saw significant accuracy improvements due to the dynamic weighting of product manuals.

• Threshold filtering minimized noise, enhancing overall response quality.

3. Self-Evaluation Effectiveness

• Improved response accuracy by 5.6% compared to the baseline RAG framework, highlighting the importance of robust validation mechanisms.

Applications and Future Directions

1. Applications

• Enterprise Support Systems: Enhance resolution times and precision in technical service workflows.

• Conversational AI: Facilitate real-time, contextually aware troubleshooting conversations.

2. Future Enhancements

• Real-Time Learning: Integrate user feedback to refine retrieval and generation processes.

• Multi-Turn Interaction: Enable iterative problem-solving with conversational context management.

Conclusion

The Weighted RAG framework represents a paradigm shift in enterprise troubleshooting, combining advanced retrieval techniques with self-evaluation for unmatched accuracy and adaptability. By integrating context-sensitive retrieval with robust validation, it ensures high-quality, actionable solutions tailored to diverse technical challenges.

This system lays the groundwork for the next generation of intelligent enterprise support systems, paving the way for faster, more efficient troubleshooting in complex environments.

Reference:arXiv:2412.12006